The correct blur

30 Jul 2017

In the past week, I learned that the RGB values stored in digital media are not the same as the values that were recorded. This isn’t an artifact of some lossy compression algorithm, but rather a clever tactic. The recorded intensities are transformed so that they fit into a smaller range of stored values.

In the days when storage space was tiny, these adjustments helped. But the same adjustments can hinder photo editing.

Here’s the full explanation by Henry Reich (minutephysics).

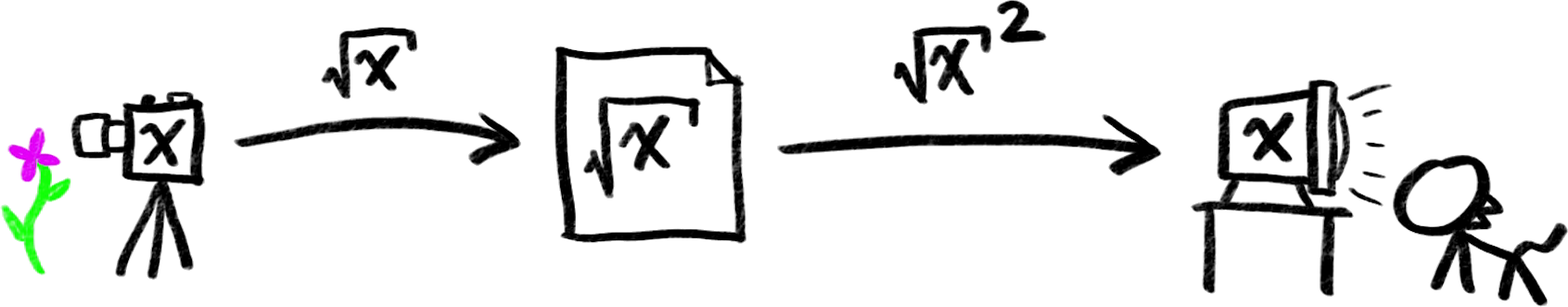

The gist of the process is to store the square root of each raw pixel value when saving the file, and to square the stored values again when displaying it (since they were rooted initially).
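Here is a minimal sketch of that round trip, assuming intensities are normalised to the range 0.0 to 1.0 and stored as 8-bit codes. The names `encode_8bit` and `decode_8bit` are made up for illustration, and real formats use a curve that is only roughly a square root.

```python
import math

def encode_8bit(linear):
    """Turn a raw (linear) intensity in [0.0, 1.0] into a stored 8-bit code.

    Hypothetical helper: takes the square root before quantising.
    """
    return round(255 * math.sqrt(linear))

def decode_8bit(code):
    """Turn a stored 8-bit code back into a raw intensity by squaring."""
    normalised = code / 255
    return normalised * normalised

raw = 0.04                      # a fairly dark pixel
stored = encode_8bit(raw)       # 51, i.e. 20% of the code range
restored = decode_8bit(stored)  # roughly 0.04 again

print(stored, restored)
```

Notice that a pixel with only 4% of the maximum brightness lands at 20% of the stored range, so the dark tones get a larger share of the available codes.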

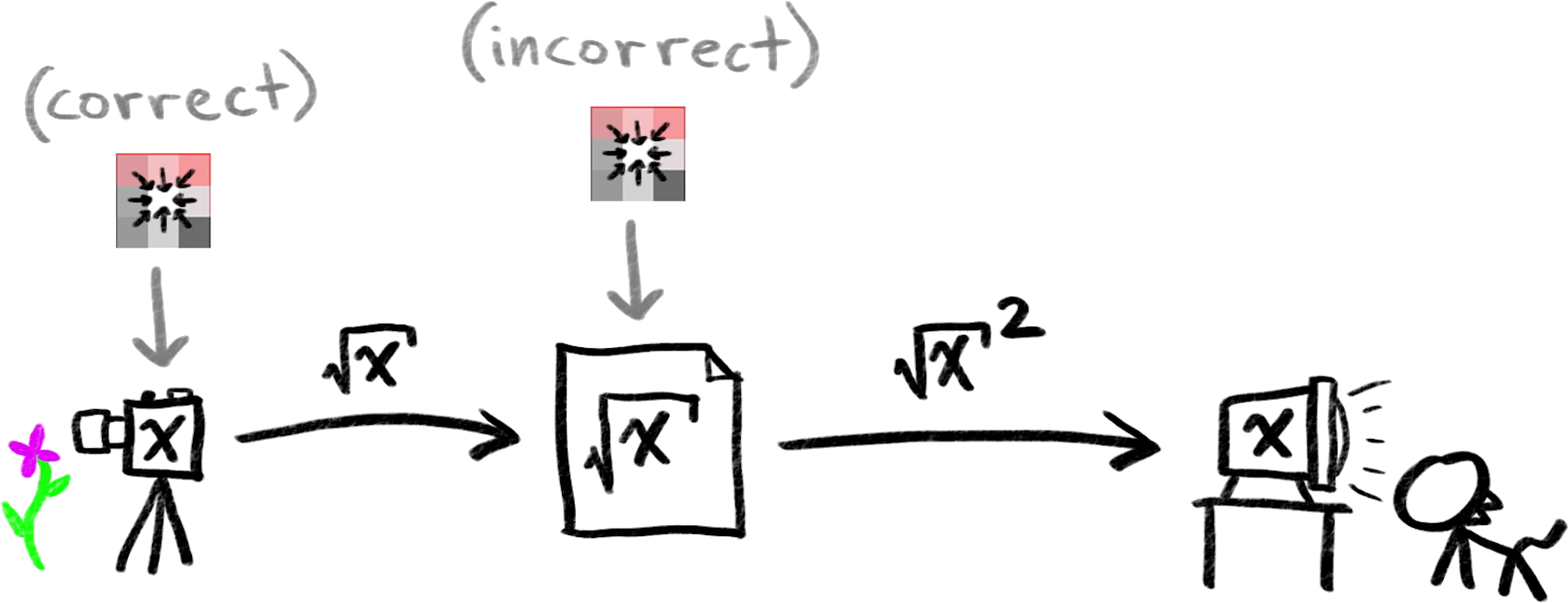

The problem occurs when editing a picture involves direct pixel manipulation. Any transform can yield bad results if it is applied to the stored values instead of the raw ones.

Blurring, for instance, is done by replacing each pixel with an average of the pixel and the neighboring pixels. The averaging must be applied to the raw pixels, not their square-roots.

This means that the stored values must be squared before the kernel convolution, and the square roots taken again before saving the file.
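To make the difference concrete, here is a rough sketch on a single row of pixels, using a plain box blur in place of a proper kernel. It assumes stored values are normalised to 0.0 to 1.0 and are exact square roots of the raw values. The function names (`box_blur`, `naive_blur`, `linear_blur`) are my own for this example, not from any library.

```python
import math

def box_blur(values, radius=1):
    """Replace each value with the mean of itself and its neighbours.

    The window is simply truncated at the ends of the row.
    """
    n = len(values)
    out = []
    for i in range(n):
        window = values[max(0, i - radius): min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def naive_blur(stored, radius=1):
    """Average the stored (square-rooted) values directly."""
    return box_blur(stored, radius)

def linear_blur(stored, radius=1):
    """Square first, average the raw values, then take roots again."""
    raw = [s * s for s in stored]
    blurred = box_blur(raw, radius)
    return [math.sqrt(v) for v in blurred]

# A hard edge: two black pixels followed by two white pixels.
row = [0.0, 0.0, 1.0, 1.0]

print(naive_blur(row))
print(linear_blur(row))
```

At the edge, the naive version produces darker in-between values than the squared version does, which matches the dark fringes you see when blurring is applied to the stored values.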

I’ve made a simple tool to visualise the difference that squaring the pixels before transformations makes. You can use it here.

Is it worth it?

Not really.

This is something that you never even knew you never knew. And now that you do know about it, this might bug you a little.

But the real problem is speed. A 16MP image has about 16 million pixels. Each of those pixels must be squared and rooted. And as image sizes grow, these extra steps will slow things down even further.